The supposed enhancement that Artificial Intelligence (AI) brings to an interaction can raise users' expectations of their own interaction efficiency. User expectations are a prerequisite for placebo effects, which can undermine the validity of human-AI evaluations and alter individual risk-taking behavior. We evaluated this hypothesis in two user studies.

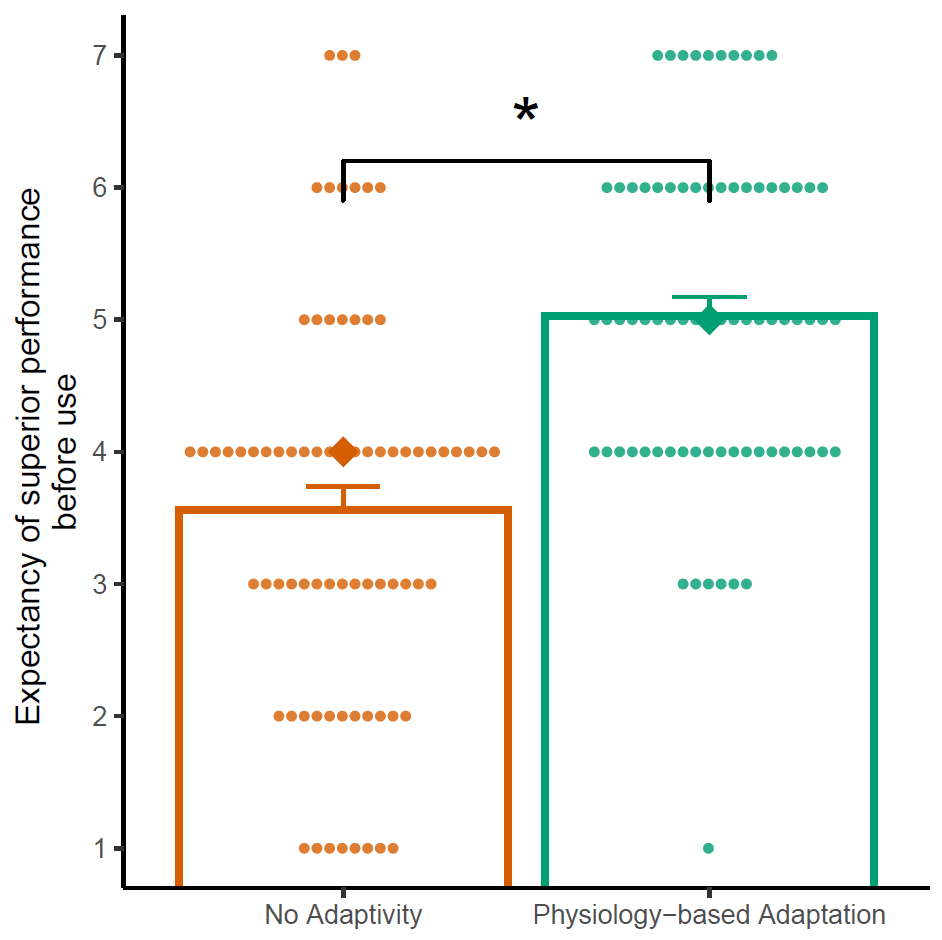

To confirm changes in user expectations, and therefore the presence of placebo effects, during interaction with AI systems, we designed a study in which participants solved word puzzles under different supposed difficulty adaptations [1]. Participants received one of three narratives. Two narratives told them that an AI was adapting the word puzzle difficulty based on either their performance or their facial expressions. The third narrative stated that no adaptation occurred and that the word puzzles were displayed in random order. In reality, the system never adjusted the difficulty: all participants were given randomized word puzzles.

We measured user expectations before and after the interaction and found that the expectation manipulation was effective at both points. Participants believed they performed significantly better with the AI adaptations than without support. These expectations also affected both subjective and objective performance.

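Our results suggest that the research community must rethink how it evaluates human-AI interaction. Researchers currently fall back on established human-computer interaction methods, which do not account for placebo effects arising from users' expectations of AI and are therefore not well suited to human-AI evaluations.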

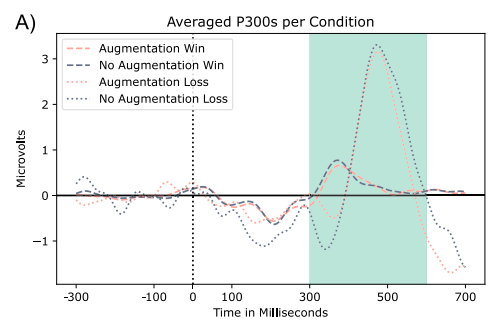

A follow-up study illustrates these implications using risk-taking behavior as an example. In this user study, we show that participants who believe they are augmented by an AI are willing to take more risks, even though the AI is a sham and contributes nothing to the augmentation [2]. By recording electroencephalography (EEG), a measure of cortical activity, during the experiment, we identified potential features, such as the P300 or N400 event-related potentials, that could be used to detect perceived conflicts during interaction with human-AI systems.

How can AI placebos be sensed and controlled? Together with collaborators from LMU Munich and Aalto University, we are currently working on these exciting research challenges.

[1]: Kosch, T., Welsch, R., Chuang, L., & Schmidt, A. (2023). The placebo effect of artificial intelligence in human–computer interaction. ACM Transactions on Computer-Human Interaction, 29(6), 1-32.

[2]: Villa, S., Kosch, T., Grelka, F., Schmidt, A., & Welsch, R. (2023). The placebo effect of human augmentation: Anticipating cognitive augmentation increases risk-taking behavior. Computers in Human Behavior, 107787.