We are happy to announce that we will present two papers and two posters at this year’s CHI in Honolulu. We are looking forward to meeting the HCI community and discussing our research results. I’m looking forward to seeing many familiar faces again :). Read on if you are interested in a brief preview of our work:

BrainCode: Electroencephalography-based Comprehension Detection during Reading and Listening

Ever read a word in a foreign language that you did not understand and had to look up? Ever wondered if there is a way to detect that you do not understand a word so that a real-time translation can be provided? In our paper “BrainCode” we evaluate specific electrical potentials from brain signals to estimate whether a word in a foreign language is known by the user or not. By looking at specific features of electrical signals, we are able to predict unknown and known words with an accuracy of 82.64% during listening and 87.13% during reading. Our findings allow interactive systems to be aware of the user’s language proficiency, enabling applications such as the implicit creation of vocabulary exercises. You can find the paper here.

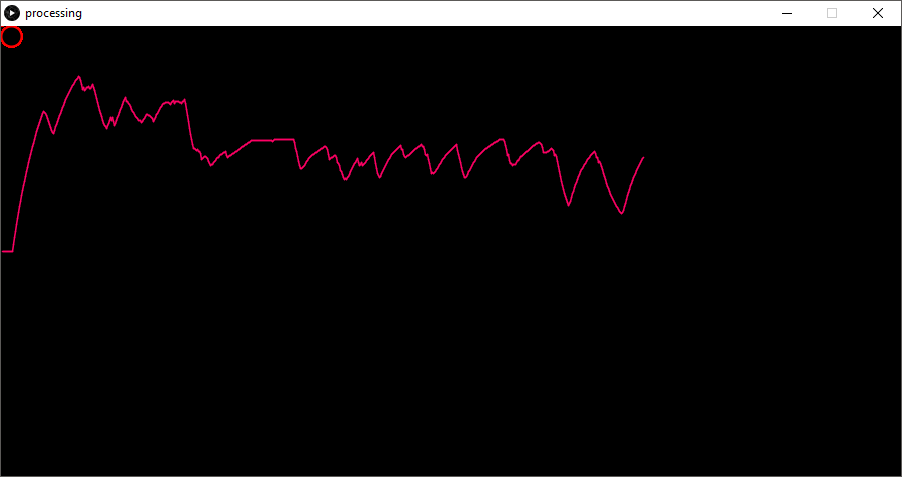

One Does not Simply RSVP: Mental Workload to Select Speed Reading Parameters using Electroencephalography

Rapid Serial Visual Presentation (RSVP) has become a popular method to read text on displays with limited screen space. Presentation speed and text alignment are two decisive design parameters that influence how efficiently text is read and how cognitively demanding reading is. In this paper, we reflect on the use of Electroencephalography (EEG) to estimate brain activity as a measure of the currently perceived cognitive workload. Furthermore, we show how the current gain in reading speed can be predicted using EEG alone. Interactive RSVP systems can use our results to predict the current reading speed gain and cognitive workload and to adjust the temporal and spatial RSVP parameters in real time. You can find the paper here.

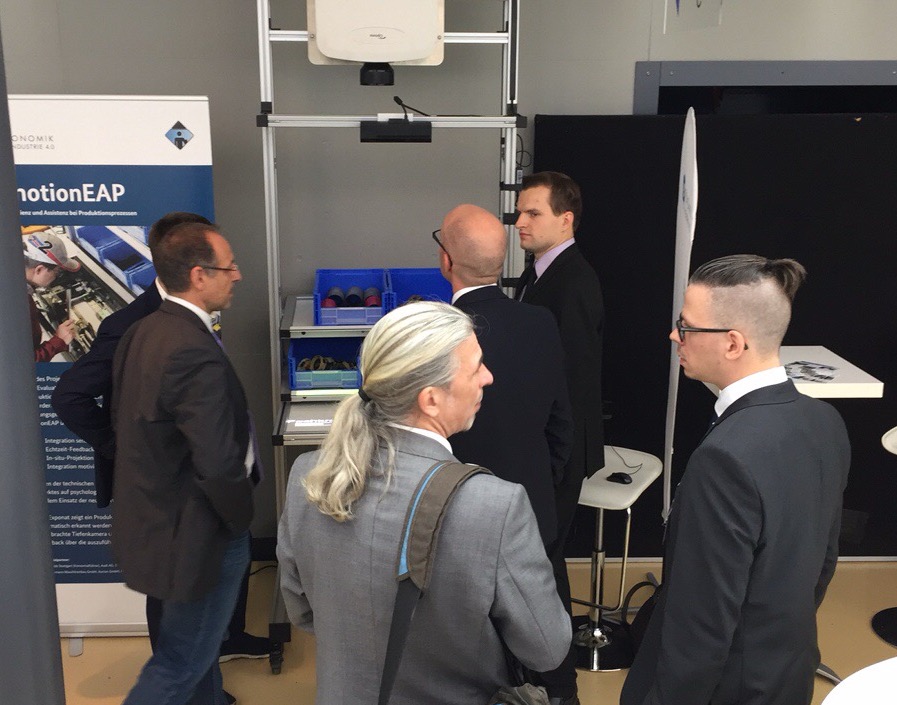

What Does the Oscilloscope Say?: Comparing the Efficiency of In-Situ Visualisations during Circuit Analysis

The rapid adoption of new Augmented Reality (AR) systems in teaching facilities changes how students interact with learning material. In this poster, we investigate how data visualizations are perceived using AR systems. We pick an electrical circuit analysis task in which students are asked to investigate electrical connections using different AR systems. We find that in-situ visualizations reduce cognitive workload while tablet-based visualizations reduce the overall task completion time. If you’re interested, you can find the whole manuscript here.

Opportunities and Challenges of Text Input in Portable Virtual Reality

Virtual Reality (VR) has become more and more commonplace in the consumer market. With this, mobile VR, such as that found on smartphones, has become more prominent among users. Typing in VR is an important interaction modality that has been scarcely researched. In this poster, we explore the potential of typing in mobile VR scenarios. If you want to know how to type efficiently in mobile VR, have a look here.